These are notes and my expanded thoughts — not a comprehensive summary of the book. I recommend buying the book if you want the context in which these notes were written.

Key Ideas

If you don't read any further just remember these key ideas I made for myself:

- I need to separate out the lucky breaks from consciously made decisions. And when thinking back on experiences I must keep in mind the outcome quality != the quality of the decision that I put into it. (Outcome bias).

- Ideally I can put down all of my predicted outcomes before I make a decision.

- Focus on the surprising outcomes, e.g. those I didn't even think of. I should prioritize digging into not just the estimates but outcomes which were not even listed as possible. The most informative lessons are found in highly unexpected outcomes.

- Remember uncertainty can arise from imperfect information (epistemic uncertainty) or just luck (aleatory uncertainty). If I can afford to spend time, money, etc. to receive information that helps reduce my uncertainty, I should do so up until I can no longer afford the time or money.

- Deliberately seek the outside view by asking others for their opinion on what decisions and outcomes are possible. Incorporate their viewpoints independently and prior to ever revealing my own.

- Even if just rough estimates, guess each outcome's payoff and probability of occurring. Sort the various outcomes and decisions by descending payoff, weighing downside risk and rewards across outcomes. Add enough detail per outcome to answer the question, "What's my expected value and what's my downside risk?" between decisions.

- If I want to ground myself in mathematical formalisms, or automate this I should probably look to the reinforcement learning literature as most of what is discussed could be stated concisely as, "Sequential Decision Making under uncertainty".
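To make the rough-estimate idea from the list above concrete, here is a minimal Python sketch. The decisions, payoffs, and probabilities are made up for illustration, and "downside risk" is assumed here to mean the probability-weighted sum of the negative payoffs, which is only one of several reasonable definitions.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    label: str
    payoff: float       # in any payoff unit, e.g. dollars
    probability: float  # rough guess between 0 and 1


def expected_value(outcomes):
    """Probability-weighted average payoff."""
    return sum(o.payoff * o.probability for o in outcomes)


def downside_risk(outcomes):
    """Probability-weighted sum of the negative payoffs only (an assumed definition)."""
    return sum(o.payoff * o.probability for o in outcomes if o.payoff < 0)


# Hypothetical decisions with guessed outcomes.
decisions = {
    "take new job": [
        Outcome("love it", 100.0, 0.5),
        Outcome("it's fine", 20.0, 0.3),
        Outcome("hate it", -80.0, 0.2),
    ],
    "stay put": [
        Outcome("promotion", 50.0, 0.25),
        Outcome("status quo", 10.0, 0.75),
    ],
}

for name, outcomes in decisions.items():
    # Sort outcomes by descending payoff, as in the note above.
    ranked = sorted(outcomes, key=lambda o: o.payoff, reverse=True)
    print(name, [o.label for o in ranked],
          "EV:", round(expected_value(outcomes), 2),
          "downside:", round(downside_risk(outcomes), 2))
```

With these made-up numbers the new job has the higher expected value but also the only downside, which is exactly the trade-off the two questions are meant to surface.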

Should you Buy the Book?

TL;DR Yes, if you want an answer to the following question, "How do I create a high quality decision making process for myself and how do I improve said process over time?".

For me, the tools contained in the book help maximize the knowledge I can learn from individual decisions and experiences. In other words, the book contains tools to:

- Reduce cognitive biases, i.e. overconfidence, hindsight, confirmation, and outcome biases (in the book this is blanketed by the concept of "resulting").

- Separate out (un)lucky outcomes from actual skill determined outcomes.

- Maximize the information I can glean from others willing to help me.

Podcast for those Auditory Learners

Before I get to my notes, I will mention I first heard Annie at a company conference a while back, and most recently heard her on the a16z Podcast: How to Decide, Convey vs. Convince, & More. And unlike most podcasts, it was good enough that if I zoned out, I had to jump back and make sure I didn't miss anything. So if you're an auditory learner, I recommend you listen here as well.

And I have to admit the podcast did its job as marketing material for Annie, as I ultimately felt intrigued enough to buy the book. In particular I wanted to see the diagrams around outcome quality vs decision.

After reading, I discovered that yes, there is a bit more depth contained in the book, but it's also a workbook meant to be filled in, with exercises throughout each chapter, e.g. "From the past year write down.. blah, blah. Now reflect on how that outcome .... blah, blah". The exercises could be answered mentally, i.e. just thinking about the prompt and not filling it in. With that said, I still found it useful to fill them out and see what I wrote in past reading sessions.

I think compared to other books, most of my notes were not directly from the exercises, nor did I pull many quotes from the book. Instead most of my notes are expansions of my own ideas induced by the plain language and checklist-esque articulation of Annie's writing style. So if you're thinking the notes follow the same chronological order as the book, you are going to be disappointed.

Instead the notes are scattered ideas and topics spurred from the podcast, book, etc.

Notes

Hindsight Bias aka "Resulting"

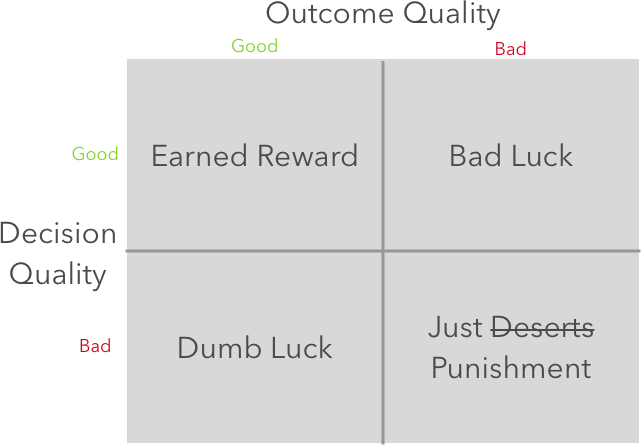

Resulting or hindsight bias is where we use the quality of the outcome to drive our opinions on the quality of the underlying decision. This is a fallacy.

Take these two scenarios (the decision -> followed by an outcome):

- Ran red light -> made it just fine.

- Went on green -> got in a crash.

After the events unfolded, and looking back, we would be succumbing to the bias if:

- In the first scenario, we ended up thinking the decision was good.

- Or in the second, we ended up thinking the decision was bad.

In other words, the first is dumb luck, and the second is bad luck.

In the book there is a graphic showing how the combined decision and outcome can be categorized. For this particular stop light scenario it's easy to categorize the scenarios, for they are contrived in both the difficulty of the decision and the time frame in which the outcome is observed.
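The categorization can be sketched as a two-by-two lookup on decision quality and outcome quality. This is my own minimal sketch, not the book's graphic: "dumb luck" and "bad luck" come from the notes above, while the labels for the other two quadrants ("earned reward" and "just deserts") are assumed here.

```python
def classify(decision_was_good: bool, outcome_was_good: bool) -> str:
    """Map decision quality and outcome quality onto one of four quadrants."""
    if decision_was_good and outcome_was_good:
        return "earned reward"   # assumed label
    if decision_was_good and not outcome_was_good:
        return "bad luck"
    if not decision_was_good and outcome_was_good:
        return "dumb luck"
    return "just deserts"        # assumed label


# The two stop light scenarios from above.
print(classify(decision_was_good=False, outcome_was_good=True))   # ran red light, made it
print(classify(decision_was_good=True, outcome_was_good=False))   # went on green, crashed
```

The point of keeping the two inputs separate is that resulting collapses them into one: it reads the decision quality straight off the outcome.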

In business or other long-running scenarios it's important to remember this fallacy, as the decisions required are far more complex and outcomes usually take a long time to materialize. If you're familiar with Reinforcement Learning, this is similar to the credit assignment problem from that domain.

In the 5th century BC, Herodotus basically described the Bad Luck and Dumb Luck quadrants as: "A decision was wise, even though it led to disastrous consequences, if the evidence at hand indicated it was the best one to make; and a decision was foolish, even though it led to the happiest possible consequences, if it was unreasonable to expect those consequences."

Sounds a lot like Reinforcement learning

The book is big on better ways of learning from experience, identifying repeatable experiences versus one-offs, calculating downside risk based on current knowledge, etc. It often skirts around many of the same ideas and problems found in RL.

Take for example the temporal credit assignment problem. It arises when the agent receives delayed rewards, making it hard to know which of the past actions or states had the largest impact on the reward. This is doubly hard when the environment is stochastic, i.e. luck plays a role.

Getting philosophical, what are we but sophisticated thinking agents making abstract decisions repeatedly in the world, getting back noisy rewards over time, e.g. money, happiness, etc.?

Another theme of RL is the exploration-exploitation tradeoff, where an RL agent can't observe the rewards resulting from actions it did not take, i.e. the counterfactual rewards. In RL the agent needs to explore in order to learn. This is hard to do efficiently.

In the book there is a whole chapter, "The Decision Multiverse" which places emphasis on listing out the counterfactual outcomes before an actual decision and outcome is realized.

The point being made is that if we do this, we prevent ourselves from forgetting or pruning all the other possible outcomes which could have occurred. Those counterfactuals are needed to see if we (mis)predicted what our decision would result in. And we need all the outcomes to compare the realized outcome's payoff against all the others we hoped for or predicted.

Individual events can lead me astray

Any individual experience could incorrectly convey a lesson which does not hold more generally, i.e. perhaps it was just luck that particular time. Without multiple tries at a decision, it's hard to tell from an experience which only happens once or twice whether the outcome was truly swayed by a conscious decision or by luck of some kind.

However, in aggregate the repeated experiences will average out, allowing me to compare and see the differences across those experiences. Such repeated tries ultimately add up to give more confidence that my actions are what determined a given outcome. This sounds a lot like how in RL we average experiences over rollouts to reduce variance.
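A small simulation shows the averaging effect. This is only a sketch under made-up assumptions: a "good" decision wins 70% of the time, a "poor" one 30%, and a win or loss pays +1 or -1. With one try the two are often indistinguishable; with many tries the averages settle near +0.4 and -0.4.

```python
import random


def simulate(win_probability, trials, rng):
    """Average payoff over repeated tries: +1 for a win, -1 for a loss."""
    total = 0
    for _ in range(trials):
        total += 1 if rng.random() < win_probability else -1
    return total / trials


rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical win rates: 70% for the good decision, 30% for the poor one.
for trials in (1, 10, 10_000):
    good = simulate(0.7, trials, rng)
    poor = simulate(0.3, trials, rng)
    print(f"{trials:>6} tries  good decision: {good:+.2f}  poor decision: {poor:+.2f}")
```

A single try can only ever report +1 or -1, so it says almost nothing about which decision was better; the long run is where the decision quality shows through the luck.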

Outcomes, Pruning, and Decision Trees

A goal of the decision making process is to accurately and consciously record the decisions and outcomes prior to any realization of them. That's why we leverage tools to proactively set ourselves up, so after the fact we can reconstruct the decisions, state of the world, knowledge, etc.

Common tools to help collect all relevant knowledge prior and at the moment of a decision:

- In business and finance - Investment memo

- Software Engineering - A requirements or engineering design Doc (PRD/ERD)

- In general, sequential decision trees.

To be biased after the fact is to ignore the possible outcomes which could have occurred.

- TODO: insert Before and After decision trees, where the leaves are pruned after the fact due to our selective memory. e.g. "It was inevitable".

- TODO: Diagram for beliefs (priors) -a-> leading to decisions -b-> leading to outcomes. Show how transition a is stochastic due to the imperfect information we have, and how transition b is potentially a source of general randomness, e.g. luck.

Unexpected Outcomes are Worth Reflecting Deeper

It's important to remember that the most informative aspect of the data collected before and after is how unexpected the outcomes turned out to be. This is the delta between what was predicted and the actual outcome.
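One way to put a number on "how unexpected" is surprisal from information theory, -log2(p), where p is the probability I wrote down for the outcome beforehand. The book does not prescribe this measure; it is a stand-in I am assuming for the delta, and the predictions below are hypothetical. An outcome that was never listed gets an infinite score, which flags it as the kind worth digging into first.

```python
import math


def surprise(predicted, actual):
    """Surprisal in bits of the realized outcome; infinite if it was never listed."""
    p = predicted.get(actual, 0.0)
    if p <= 0.0:
        return math.inf
    return -math.log2(p)


# Hypothetical predictions written down before the decision.
predicted = {"on time": 0.5, "a week late": 0.25, "a month late": 0.25}

print(surprise(predicted, "on time"))      # 1.0
print(surprise(predicted, "a week late"))  # 2.0
print(surprise(predicted, "cancelled"))    # inf, the outcome was not even listed
```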

Payoffs are usually easiest to assign and compare if a dollar amount is attached to them. However, the unit used for outcome payoffs could be anything to which a numerical value can be assigned:

- health metrics

- personal happiness

- self-improvement

Quick Decisions may not need the full calculation of payoff or sorting of different outcomes

It's actually more common that I encounter a decision which does not require much analysis. I can preempt or short-circuit the work of listing outcomes and assigning probabilities by using the "happiness test", e.g. when deciding what Netflix title to watch or which common dish to order at a restaurant.

I could test the work needed by asking and answering myself the following prompt:

prompt("How often will you be able to make {{decision}} in the {{time duration}}?"):

case {{time duration}} > threshold // e.g. "weekly" > 5

// ignore

case {{time duration}} <= threshold // e.g. "yearly" < 1

// do work

prompt("What's the worst {{payoff unit}} that could happen?"):

case {{payoff unit}} >= threshold // e.g. $0:

// ignore

case {{payoff unit}} < -$150:

// do work

Or if I already made a decision, some time has passed, and I want to learn if the work done was worth it. Or I get lazy and just want to use some arbitrary scale I keep internally:

prompt("On a scale of 1 to 5 how much of an effect did {{decision}} make on

case {{scale}} <= 3:

// ignore

case {{scale}} > 3:

// do work

I would prompt myself when contemplating a decision, but before I do the hard work of enumerating, sorting, and assigning payoffs to outcomes. In the cases implying small payoffs with little to no downside risk, I would not waste time, and just act.
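The prompts above could be made runnable along these lines. This is a sketch with assumptions: I measure frequency in times per year, reuse the 5 and -$150 from the examples as default thresholds, treat a worst case between -$150 and $0 as "ignore" (the prompts leave that gap open), and combine the first two prompts with a logical "and" in `do_the_work`, which is my own choice.

```python
def rare_enough(times_per_year, threshold=5):
    """First prompt: decisions I get to repeat often can be ignored."""
    return times_per_year <= threshold


def costly_enough(worst_payoff, threshold=-150.0):
    """Second prompt: only a worst case below the threshold is worth the work."""
    return worst_payoff < threshold


def impactful_enough(scale_1_to_5):
    """Third prompt: the lazy 1-to-5 scale, where anything above 3 counts."""
    return scale_1_to_5 > 3


def do_the_work(times_per_year, worst_payoff):
    """Assumed combination: analyze only rare decisions with a real downside."""
    return rare_enough(times_per_year) and costly_enough(worst_payoff)


# Hypothetical examples.
print(do_the_work(times_per_year=300, worst_payoff=-15.0))   # picking a Netflix title
print(do_the_work(times_per_year=1, worst_payoff=-5000.0))   # a large one-off purchase
```

The first call returns False and the second True, so only the rare, expensive decision earns the full enumeration of outcomes.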

Dr Evil Game and Exception context based decisions

E.g. the small isolated decisions of little value which, if done in aggregate, lead to large negative outcomes.

"Small individual decisions that are individually justifiable but in aggregate will cause a future negative outcome."

To prevent luck from dominating outcomes, hedging allocates payoff units in advance. The hedge is made in the hope that, if used during a bad luck scenario, it could prevent said bad outcome, or at least minimize it.

App idea cont. Suggest creating a pre-commitment contract for decisions which have a small individual negative payoff, but which occur multiple times. Collect counts of decision and trigger donating to some unpleasant friend, charity or group if threshold crossed.
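The counting part of that app idea could look something like the sketch below. Everything here is hypothetical: the class name, the threshold, and the `penalty` callback, which in a real app would trigger the donation and here just records the decision name.

```python
from collections import Counter


class PreCommitment:
    """Count small repeated decisions and fire a penalty once a threshold is crossed."""

    def __init__(self, threshold, penalty):
        self.threshold = threshold
        self.penalty = penalty      # callable invoked with the decision name
        self.counts = Counter()

    def record(self, decision):
        self.counts[decision] += 1
        if self.counts[decision] > self.threshold:
            self.penalty(decision)
            self.counts[decision] = 0  # start a fresh window after paying up


triggered = []
contract = PreCommitment(threshold=3, penalty=triggered.append)
for _ in range(4):
    contract.record("skipped workout")
print(triggered)  # ['skipped workout']
```

Resetting the count after the penalty is a design choice; keeping a running total and charging on every further occurrence would be a harsher contract.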

Prospective Hindsight

Setting the final outcome, and imagining what ways you could have arrived at that outcome.

Focusing on the future scenarios when you failed and looking back is the premortem. Here is how I see the steps:

- List the positive goal or outcome.

- Set a time frame for the goal.

- Set a failure outcome at some future point in time (timeframe + t+1).

- Enumerate the decisions which if made still lead to the failure outcome.

- Enumerate the uncertainty driven reasons (e.g. luck) on why that outcome failed.

The backcast and the premortem are the same up to the point at which the outcome payoffs are determined: backcast == "successful outcomes" while premortem == "failure outcomes". So to backcast, I follow the same steps above, with the difference that I now focus on positive outcomes.
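Since the two exercises share the same steps, one record type can hold either. A minimal sketch, where the field names are my own and the example entries are made up:

```python
from dataclasses import dataclass, field


@dataclass
class ProspectiveHindsight:
    goal: str
    time_frame: str
    imagined_outcome: str  # "failure" for a premortem, "success" for a backcast
    decision_reasons: list = field(default_factory=list)  # within my control
    luck_reasons: list = field(default_factory=list)      # outside my control

    def kind(self):
        return "premortem" if self.imagined_outcome == "failure" else "backcast"


# Hypothetical premortem.
premortem = ProspectiveHindsight(
    goal="ship the side project",
    time_frame="6 months",
    imagined_outcome="failure",
    decision_reasons=["kept adding features", "never set a weekly schedule"],
    luck_reasons=["day job got busier"],
)
print(premortem.kind(), len(premortem.decision_reasons), len(premortem.luck_reasons))
```

Keeping the decision-driven and luck-driven reasons in separate lists mirrors the last two steps of the list above.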

TODO: diagram for the skill-based actions vs. the outside events (e.g. luck), and how those map to the success outcomes (backcast) and the failure outcomes (premortem). Backcasts are easy to generate, since it's fun to think of ways of succeeding. Defining the potential failures in a premortem is harder, but ultimately more useful in the decision making process.

Pre-committing

Pre-committing to decisions means forcing subsequent sequential decisions which require effort, are unpleasant, or are just hard to be motivated about.

"Ulysses contracts" are where one sets an automatic behavior regardless of the pull of a tempting scenario. There are two ways to set them up, either:

- Raising barriers to prevent oneself from making known poor future decisions.

- Reducing friction to executing actions that have good payoffs but require lots of willpower or resources.

Outside views

Gathering an outside view is crucial to breaking out of our own biases. It gives better estimates and uncovers outcomes which were overlooked. However, the order in which I gather others' beliefs versus tell others about my own matters.

Contagion

Beliefs are contagious, therefore I should seek out and ask others for their beliefs first, before I go espousing my own. If I were to tell others my beliefs first, I would more likely hear my own ideas being agreed with and just repeated back. Hearing what I already know is due to how we as humans tend to normalize, agree, and follow along.

If I hold inaccurate beliefs the quality of any decision informed by that belief will suffer.

Outcomes are also subject to contagion. I should keep mine hidden from others if I am to avoid biasing the outcomes they themselves generate.

To get high quality feedback I need to set up and provide good, detailed contextual knowledge to the other person I'm expecting to generate outcomes. I need that person to be able to see the state of the world as I do, so I should give them the results of the due diligence I did to build up my own knowledge.

The only way someone can know they are disagreeing with me is if they know what I'm thinking first. Therefore keeping my opinions as follow-up topics when eliciting feedback or thoughts makes it more likely I will hear what they actually believe for themselves.

If feedback or opinions can be asked from an anonymous source, that anonymity could help reduce the halo effect. The halo effect is where a person's authority or standing is given more weight, effectively biasing the feedback so that it aligns less with reality.

Negative Thinking

"Think positive but plan negative" ... "Don't confuse the destination with the route"

It's easy to come up with lots of examples where I held strong beliefs in the past which I have now reconsidered to be wrong or indefensible. Contrast that with the harder task of predicting which beliefs I hold now will change so fundamentally that I'll have to rescind them.

"Imagining you might fail is not going to make failure materialize, contrary to so many of those 'positive thinking' only books."